Introduction

The size and complexity of economic data are increasing rapidly as the economy becomes more and more digital. This presents both opportunities and challenges for analysts who want to process and interpret these data to gain insights into economic phenomena. Machine learning (ML) has emerged as a powerful tool for analyzing large and complex datasets across disciplines and could mitigate some of the challenges that the digitalization of the economy poses to economics research and analysis. ML models can effectively process large volumes of diverse data, which could allow more complex economic models to be built. As a result, the use of ML in economics research is expanding.

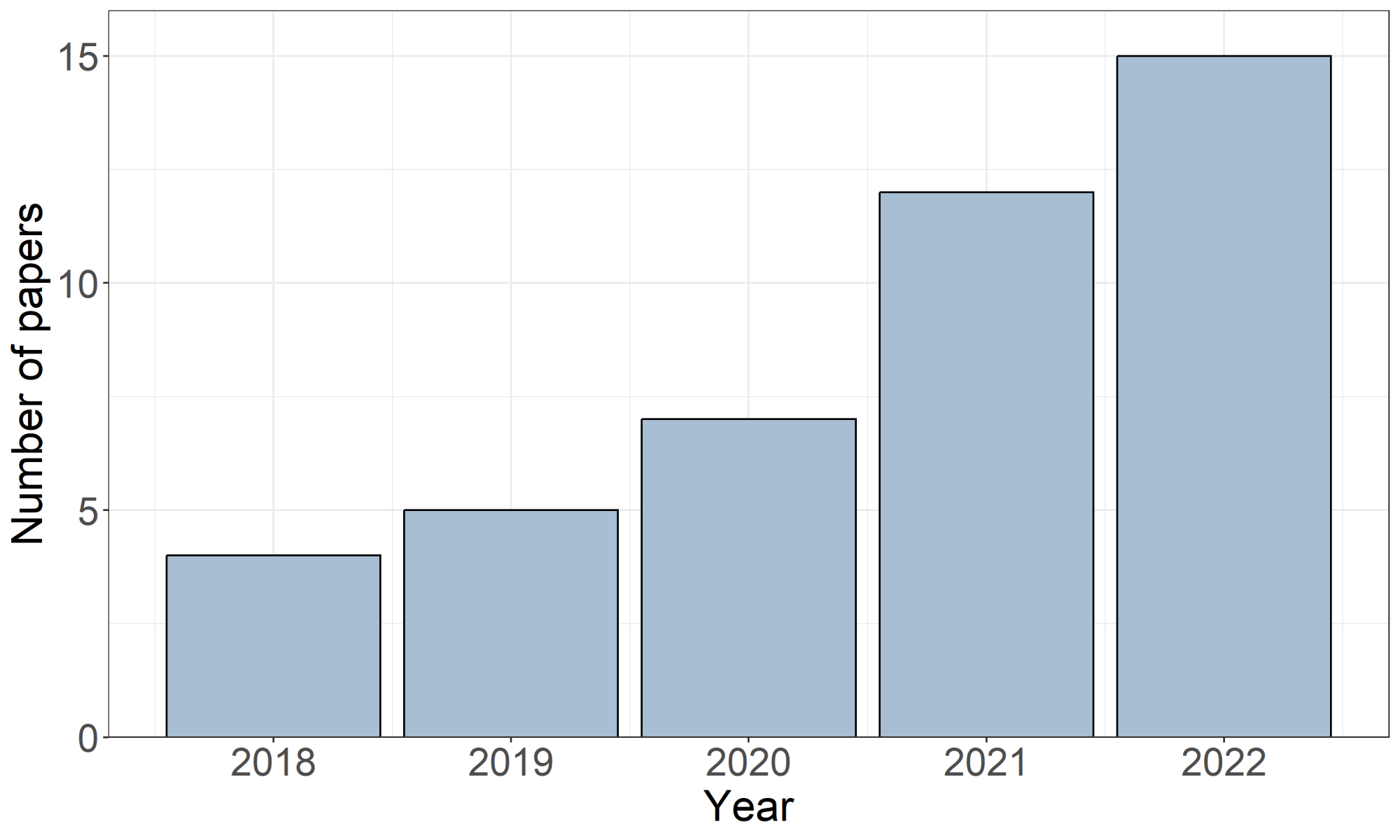

A review of articles published in 10 leading economics journals¹ shows that the number of articles about ML has increased significantly in the last few years (Chart 1). This trend is expected to continue as researchers explore new ways to apply ML techniques to a range of economic problems. Nevertheless, the suitability and applicability of these tools are not widely understood among economists and data scientists. To bridge this gap, we review selected papers that use ML tools and have been published in prominent economics journals. The goal of this review is to help economists interested in leveraging ML tools for their research and analysis as well as data scientists seeking to apply their skills to economic applications.²

Chart 1: Published research papers that use machine learning, 2018–22

Note: The data include articles from the following 10 journals: American Economic Review, Econometrica, Journal of Economic Perspectives, Journal of Monetary Economics, Journal of Political Economy, Journal of Econometrics, Quarterly Journal of Economics, Review of Economic Studies, American Economic Journal: Macroeconomics, American Economic Journal: Microeconomics. The relevant papers are identified using the following search terms: machine learning, ensemble learning, deep learning, statistical learning, reinforcement learning and natural language processing.

We aim to showcase the benefits of using ML in economics research and policy analysis and offer suggestions on where, when and how to effectively apply ML models. We take a suggestive approach, rather than an explanatory one. In particular, we focus on supervised ML methods commonly used for prediction problems, such as regression and classification. The article is organized into three main sections, each focusing on a key question:

- when is ML used in economics

- what ML models do economists prefer

- how is ML used for economic applications

Finally, we briefly discuss the limitations of ML.

The key lessons of the review are summarized as follows:

- ML models are used to process non-traditional and unstructured data, such as text and images, and to capture strong non-linearity that is difficult to capture using traditional econometric models. ML also improves the accuracy of predictions, extracts new information and automates feature extraction from large but traditional datasets. We find that ML tools may not be useful when the data are not overly complex. In those cases, traditional econometric models would likely be sufficient.

- The choice of ML model depends on the type of application and the characteristics of the underlying data. For example, latent Dirichlet allocation (LDA), an ML algorithm for probabilistic topic modelling, is commonly used for textual analysis. However, deep-learning models, such as transformers, can also handle large amounts of text or audio data. Convolutional neural network models, such as ConvNext, are preferred for image or video datasets. Ensemble learning models are commonly used for traditional datasets, and causal ML models are used when an analysis focuses on causal inference.

- The usefulness of ML models can be greatly improved by tailoring them to the specific application, especially when dealing with a large amount of traditional, complex data. This approach is more effective than using off-the-shelf tools. Moreover, pre-trained models with transfer learning can be advantageous when applying deep learning to a limited amount of non-traditional data.³

Despite the potential benefits of ML, our review identifies a few challenges that must be overcome for ML to be used effectively in economics research. For instance, ML models require large amounts of data and ample computational resources. This can limit some researchers because it can be difficult to obtain high-quality data. Data also may be incomplete or biased. Additionally, ML models are prone to overfitting and can be challenging to interpret, which limits their utility. Moreover, most ML models do not have standard errors, and other statistical properties have not yet been well-defined. This can make it difficult to draw conclusions from the results. Therefore, we recommend caution when using ML models.

Despite these limitations, we find that researchers successfully use ML alongside traditional econometric tools to advance understanding of economic systems. By combining the strengths of both fields, researchers can improve the accuracy and reliability of economic analyses to better inform policy decisions.

Use of machine learning in economics

The literature suggests that ML models could add value to economic research and analysis by:

- processing non-traditional data, such as images, text, audio and video

- capturing non-linearity that is difficult to capture using traditional models

- processing traditional data at scale to improve the accuracy of predictions, extract new information or automate feature extraction

For instance, Varian (2014) suggests that big data, due to its sheer size and complexity, may require the more powerful manipulation tools that ML can offer. Also, ML can help with variable selection when researchers have more potential predictors (or features) than is appropriate for estimation. Finally, ML techniques are handy in dealing with big data because they can capture more flexible relationships in the data than simple linear models, potentially offering new insights.

Mullainathan and Spiess (2017) argue that the success of ML is largely due to its ability to discover complex structures in data that are not specified in advance. As well, they suggest that applying ML to economics requires finding relevant tasks where the focus is on improving the accuracy of predictions or uncovering patterns from complex datasets that can be generalized. Also, Athey and Imbens (2019) argue that ML methods have been particularly successful in big data settings that have information on a large number of features, many pieces of information on each feature, or both. They suggest that researchers using ML tools for economics research and analysis should clearly articulate their goals and explain whether certain properties of ML algorithms are important.

Processing non-traditional data

Non-traditional datasets, such as images, text, audio and video, can be difficult to process using traditional econometric models. In those cases, ML models can extract valuable information that can be incorporated into traditional economic models.

For instance, Hansen, McMahon and Prat (2018) use an ML algorithm for probabilistic topic modelling to assess how transparency, a key design feature of central banks, affects the deliberations of monetary policy-makers. Similarly, Larsen, Thorsrud and Zhulanova (2021) use a large collection of news articles and ML algorithms to investigate how the media help households form inflation expectations, while Angelico et al. (2022) use data from the X platform (formerly known as Twitter) and an ML model to measure inflation expectations. Likewise, Henderson, Storeygard and Weil (2012) use satellite images to measure growth in gross domestic product (GDP) at the sub-national and supranational levels, while Naik, Raskar and Hidalgo (2016) use a computer vision algorithm to measure the perceived safety of streetscapes and examine how it correlates with population density and household income. Gorodnichenko, Pham and Talavera (2023) use deep learning to detect emotions embedded in US Federal Reserve press conferences and examine how those detected emotions influence financial markets. Likewise, Alexopoulos et al. (2022) use machine learning models to extract soft information from congressional testimonies. They analyze textual, audio and video data from Federal Reserve chairs to assess how their communications affect financial markets.

Capturing strong non-linearity

ML could be useful if the data and application contain strong non-linearity, which is hard to capture with traditional approaches. For instance, Kleinberg et al. (2018) evaluate whether ML models can help improve US judges’ decisions on granting bail. Although the outcome is binary, granting bail demands that judges process complex data to make prudent decisions.

Similarly, Maliar, Maliar and Winant (2021) use ML to solve “dynamic economic models by casting them into non-linear regression equations.” Here, ML is used to deal with multicollinearity and to perform the model reduction. Mullainathan and Obermeyer (2022) use ML to test how effective physicians are at diagnosing heart attacks. The authors use a large and complex dataset similar to one that physicians had available at the time of diagnosis to uncover potential sources of error in medical decisions.

Processing traditional data

ML could be useful for processing traditional datasets that are large and complex and have many variables. In such cases, the ML models can help:

- improve the accuracy of predictions

- extract new information

- automate feature extraction

For instance, Bianchi, Ludvigson and Ma (2022) combine a data-rich environment with an ML model to provide new estimates of time-varying expectational errors embedded in survey responses. They conclude that ML can be used to correct errors in human judgment and improve predictive accuracy.

Similarly, Bandiera et al. (2020) use high-frequency, high-dimensional diary data and an ML algorithm to measure the behaviour of chief executive officers. Farbmacher, Löw and Spindler (2022) use ML to detect fraudulent insurance claims using unstructured data comprising inputs of varying lengths and variables with many categories. They argue that ML alleviates the challenges that traditional methods encounter when working with these types of data. Also, Dobbie et al. (2021) suggest that ML-based, data-driven decisions for consumer lending could be free from bias and more accurate than examiner-based decisions.

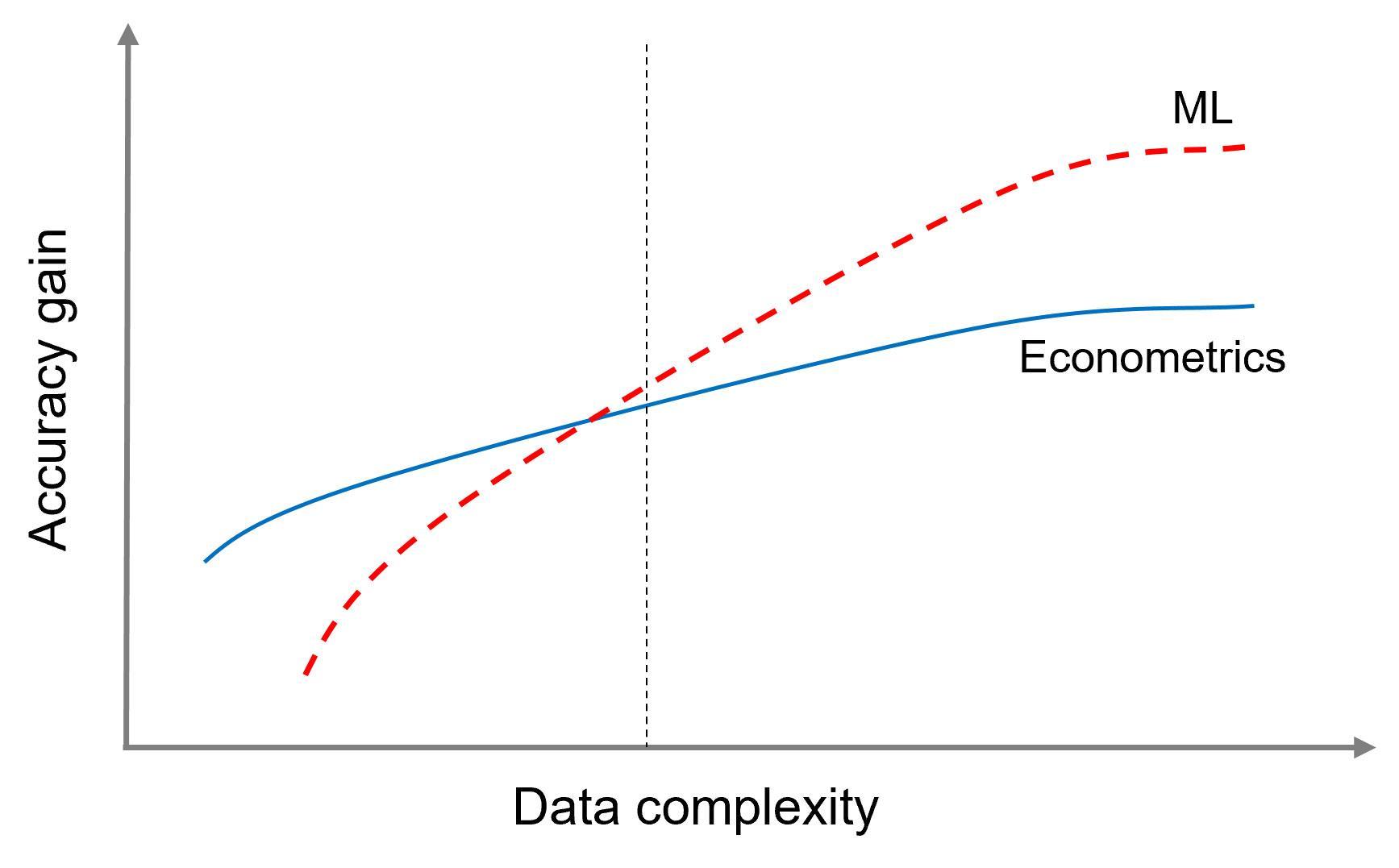

ML is probably not useful for cases where the data are not very complex. Complexity here could be related to the shape, size, collinearity or non-linearity of the data. In these cases, traditional econometric models would likely be sufficient. However, as the data become more complex, such as when dealing with big data, the value added by ML models could be higher beyond a certain threshold, as shown by the vertical dotted line in Figure 1.

Figure 1: Relative merits of machine learning and traditional econometric methods

Note: The plot is adapted from Dell and Harding (2023) and Harding and Hersh (2018).

Commonly preferred machine learning models

Different ML models are better suited for different types of applications and data characteristics. In this section, we discuss which models are most effective for a given type of economic application.

Deep learning

Natural language processing (NLP), which primarily relies on analyzing textual data, has many applications in economics.⁴ For instance, it could be used for topic modelling or sentiment analysis. The model of choice for topic modelling to quantify text is LDA, proposed by Blei, Ng and Jordan (2003). LDA is an ML algorithm for probabilistic topic modelling. It breaks down documents in terms of the fraction of time spent on a variety of topics (Hansen, McMahon and Prat 2018; Larsen, Thorsrud and Zhulanova 2021).

The use of deep-learning models for NLP is evolving rapidly, and various large language models could be used to process textual data. In particular, transformer models are more efficient at extracting valuable information from textual data (Dell and Harding 2023; Gorodnichenko, Pham and Talavera 2023; Vaswani et al. 2017). Moreover, almost all general-purpose large language models, including GPT-3 and ChatGPT, are built on generative pre-trained transformers (Brown et al. 2020).

An interesting application of computer vision models in economics is the use of a broad set of satellite images or remote sensing data for analysis. For instance, Xie et al. (2016) use high-resolution satellite images and machine learning to develop accurate predictors of household income, wealth and poverty rates in five African countries. Similarly, Henderson, Storeygard and Weil (2012) use satellite data to measure GDP growth at the sub-national and supranational levels.

Donaldson and Storeygard (2016) document the opportunities and challenges in using satellite data and ML in economics. The authors conclude that models with such data have the potential to perform economic analysis at lower geographic levels and higher time frequencies than commonly available models. Various deep-learning models can extract useful information from images, including transformers (Chen et al. 2020). But the ConvNext model (Liu, Mao et al. 2022) is becoming more successful at efficiently processing image datasets (Dell and Harding 2023).

Ensemble learning

Ensemble learning models could be useful if the data are small, include many features and contain collinearity or non-linearity, which are hard to model. For instance, Mullainathan and Spiess (2017) compare the performance of different ML models in predicting house prices. They show how non-parametric ML algorithms, such as random forests, can perform significantly better than ordinary least squares, even with moderate sample sizes and a limited number of covariates.

Similarly, Mullainathan and Obermeyer (2022) use ensemble learning models that combine gradient-boosted trees and LASSO (least absolute shrinkage and selection operator) to study physicians’ decision making and uncover potential sources of errors. Also, Athey, Tibshirani and Wager (2019) propose using generalized random forests, a method for nonparametric statistical estimation. This method can be used for three statistical tasks:

- nonparametric quantile regression

- conditional average partial effect estimation

- heterogeneous treatment effect estimation using instrumental variables

Moreover, the reviewed literature shows that ensemble learning models are popular in macroeconomic prediction (Richardson et al. 2020; Yoon 2021; Chapman and Desai 2022; Goulet Coulombe et al. 2022; Bluwstein et al. 2023).

Causal machine learning

Causal ML is helpful for inferring causalities in large and complex datasets. For instance, Wager and Athey (2018) extend a random forest by developing a nonparametric causal forest for estimating heterogeneous treatment effects. The authors demonstrate that any type of random forest, including classification and regression forests, can provide valid statistical inferences. In experimenting with these models, they find causal forests to be substantially more powerful than classical methods—especially in the presence of irrelevant covariates.

Similarly, Athey and Imbens (2016) use ML to estimate heterogeneity in causal effects in experimental and observational studies. Their approach is tailored for applications where many attributes of a unit may be observed relative to the number of units and where the functional form of the relationship between treatment effects and the attributes is unknown. This approach enables the construction of valid confidence intervals for treatment effects, even with many covariates relative to the sample size, and without sparsity assumptions. Davis and Heller (2017) demonstrate the applicability of these methods for predicting treatment heterogeneity using applications for summer jobs.

Machine learning for economic applications

For large machine learning models applied to non-traditional data, Dell and Harding (2023) recommend using pre-trained models and transfer learning techniques. Approaches for processing non-traditional data that are based on deep learning are state-of-the-art. However, researchers face many difficulties when using them for economic applications. For instance, large amounts of data and ample computational resources are often necessary to train these models, both of which are scarce resources for economists. Moreover, these models are notoriously convoluted, and many economics researchers who would benefit from using these methods lack the technical background to implement them from scratch (Shen et al. 2021). Therefore, transfer learning, which involves adapting large models pre-trained on specific applications, could be used for similar economic applications.

Off-the-shelf ensemble learning models are useful when dealing with panel data that have strong collinearity or non-linearity, but these models should be adapted to suit the task. For instance, Athey, Tibshirani and Wager (2019) adapt the popular random forests algorithm to perform non-parametric regression or estimate average treatment effects. Recall the earlier example of Farbmacher, Löw and Spindler (2022), where the authors adapt a standard neural network to detect fraudulent insurance claims. The authors also demonstrate that the adapted model, an explainable attention network, performs better than an off-the-shelf model. Likewise, Goulet Coulombe (2020) adapts a canonical ML tool to create a macroeconomic random forest. The author shows that the adapted model, to some extent, is interpretable and provides improved forecasting performance compared with off-the-shelf ML algorithms and traditional econometric approaches.

There are many other examples where the model or the procedure to train the model is adapted to improve performance. For instance, Athey and Imbens (2016) adapt the standard cross-validation approach to construct valid confidence intervals for treatment effects, even with many covariates relative to the sample size. Similarly, Chapman and Desai (2022) propose a variation of cross-validation approaches to improve the predictive performance of macroeconomic nowcasting models, especially during economic crises.

In their online course, Dell and Harding (2023) offer the following practical recommendations for using ML in economic applications:

- Bigger is better, both in terms of dataset and model size, when using deep-learning models for processing non-traditional data.

- The Python programming language is preferred over other languages for applied ML due to its speed and community support.

- Unix-based operating systems should be used instead of Windows systems when training large ML models.

Other emerging applications

Other types of ML approaches, such as unsupervised learning and reinforcement learning, have not made a notable impact in economics research. However, some initial applications of these approaches can be found in the literature. For instance, Gu, Kelly and Xiu (2021) use an unsupervised dimension reduction model, called an auto-encoder neural network, for asset pricing. Similarly, Triepels, Daniels and Heijmans (2017) use an auto-encoder-based unsupervised model to detect anomalies in high-value payments systems. And Decarolis and Rovigatti (2021) use data on nearly 40 million Google keyword auctions and unsupervised ML algorithms to cluster keywords into thematic groups serving as relevant markets.

Reinforcement learning (RL) models could be used to model complex strategic decisions arising in many economic applications. For instance, Castro et al. (2021) use RL to estimate optimal decision rules of banks interacting in high-value payments systems. Similarly, Chen et al. (2021) use deep RL to solve dynamic stochastic general equilibrium models for adaptive learning at the interaction of monetary and fiscal policy, while Hinterlang and Tänzer (2021) use RL to optimize monetary policy decisions. Likewise, Zheng et al. (2022) use an RL approach to learn dynamic tax policies.

Limitations

The key limitations of ML for economics research and analysis are outlined below:

- Large datasets and ample computational resources are often necessary to train ML models—especially deep learning models.

- Due to their flexibility, ML models are easy to overfit, and their complexity makes them hard to interpret. Interpretability is crucial for many economic applications.

- Most ML models have no standard errors or well-defined asymptotic properties, which could be essential for many economic applications.

- ML models can be biased if the data used to train these models are biased or of low quality.

The literature is evolving to address these challenges, but some of these challenges could take longer to mitigate than others. For instance, limited data exist in many economic applications, which restricts the applicability of large ML models. This could be potentially mitigated in certain applications as the economy becomes more digital, allowing researchers to gather more data at a much higher frequency than traditional economics datasets.

Researchers are also making progress in overcoming the challenges of interpretability and explainability of models. For instance, one approach recently developed to address these issues is to use Shapley-value-based methodologies, such as those developed in Lundberg and Lee (2017) and Buckmann, Joseph and Robertson (2021). These methods are useful for macroeconomic prediction models, as shown in Buckmann, Joseph and Robertson (2021); Chapman and Desai (2022); Liu, Li et al. (2022) and Bluwstein et al. (2023). However, although such methods are based on game theory, they do not provide any optimal statistical criterion, and asymptotics for many of those approaches are not yet available. To overcome these issues, Babii, Ghysels and Striaukas (2022) and Babii et al. (2022) develop asymptotics in the context of linear regularized regressions and propose an ML-based sampling of mixed data. However, much progress needs to be made to use such asymptotic analysis for popular nonlinear ML approaches.
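To make the Shapley-value idea concrete, the following minimal sketch computes exact Shapley attributions for a toy prediction function by averaging each feature's marginal contribution over all coalitions of the other features. The function `toy_model`, its feature names, the input and the baseline are hypothetical and are not taken from the cited papers. Practical tools, such as those of Lundberg and Lee (2017), rely on approximations rather than this brute-force enumeration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one input x relative to a baseline input.

    Features outside a coalition are set to their baseline value.
    The cost grows as 2**n, so this is feasible only for a few features.
    """
    n = len(x)
    values = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight shared by all coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without_i = [x[j] if j in coalition else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]
                values[i] += weight * (predict(with_i) - predict(without_i))
    return values


def toy_model(features):
    """Hypothetical non-linear predictor with one interaction term."""
    credit_growth, yield_slope, inflation = features
    return 2.0 * credit_growth - 1.5 * yield_slope + 0.5 * credit_growth * inflation


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
```

By construction, the attributions in `phi` add up to the difference between the prediction at `x` and the prediction at `baseline`, and the interaction term is split equally between the two features involved.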

Conclusion

We highlight that ML is increasingly used for economics research and policy analysis, particularly for analyzing non-traditional data, capturing non-linearity and improving the accuracy of predictions. Importantly, ML can complement traditional econometric tools by identifying complex relationships and patterns in data that can then be incorporated into econometric models. As the digital economy and economic data continue to grow in complexity, ML remains a valuable tool for economic analysis. However, a few limitations need to be addressed to improve the utility of ML models, and the literature is progressing toward mitigating those challenges.

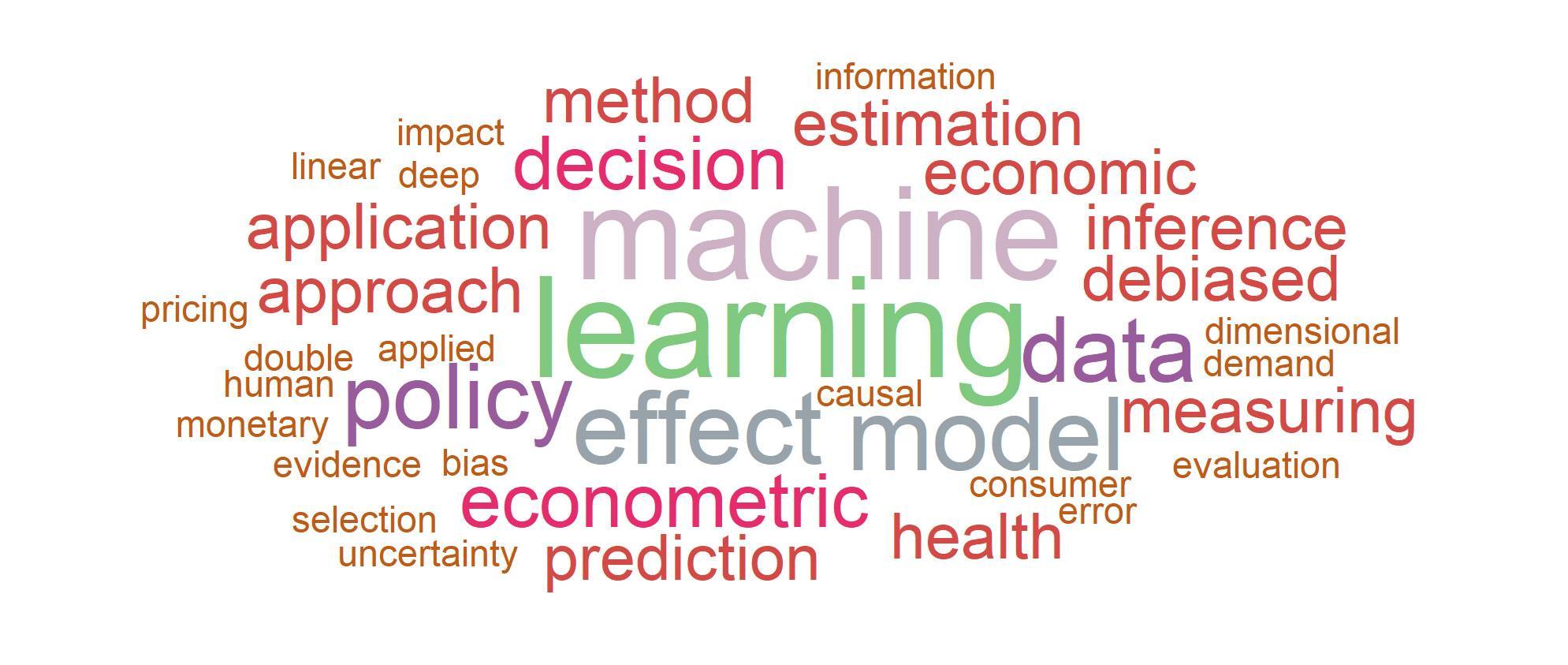

Lastly, Figure 2 presents the word clouds generated from the titles and abstracts of the articles in our dataset. These word clouds illustrate the frequency of certain terms, with larger font sizes indicating more frequent usage. For example, the terms “machine” and “learning” are prominently featured in both titles and abstracts, highlighting their relevance in those articles. They are followed by words such as “data,” “effect” and “decision.”

Figure 2: Word clouds generated from titles and abstracts in the dataset

a. Titles

b. Abstracts

Note: Our dataset of articles about machine learning, collected from prominent economics journals, is visualized through word clouds of their titles and abstracts. These word clouds display term frequency, with larger fonts indicating higher usage. For instance, “machine” and “learning” appear prominently in titles and abstracts, underlining their significance.
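The term frequencies behind a word cloud can be computed with a simple count. The sketch below is only an illustration: the three titles and the stop-word list are invented, and it is not the procedure used to produce Figure 2.

```python
import re
from collections import Counter

# Hypothetical titles, invented for illustration only
titles = [
    "Machine Learning Methods for Economic Forecasting",
    "Deep Learning and Text Data in Monetary Policy",
    "Machine Learning for Causal Inference",
]

# Small, assumed stop-word list; real applications use a fuller one
STOP_WORDS = {"a", "and", "for", "in", "of", "the"}


def term_frequencies(documents):
    """Count lower-cased words across documents, skipping stop words."""
    counts = Counter()
    for doc in documents:
        words = re.findall(r"[a-z]+", doc.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts


freq = term_frequencies(titles)
top_terms = freq.most_common(2)  # the terms a word cloud would draw largest
```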

Appendix A: Latent Dirichlet allocation

Latent Dirichlet allocation is a probabilistic ML model commonly used for topic modelling. It assumes that each text document in a corpus contains a mixture of various topics that reside within a latent layer and that each word in a document is associated with one of these topics. The model infers those topics based on the distribution of words across the entire corpus. The output of the model is a set of topic probabilities for each document and a set of word probabilities for each topic. It has many practical applications, including text classification, information retrieval and social network data analysis. Refer to Blei et al. (2003) for more details on the LDA model and its formulation.
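As a rough illustration of how the two outputs described above can be estimated, the sketch below fits LDA to a tiny corpus with collapsed Gibbs sampling. This is one common estimation approach and an assumption on our part; Blei, Ng and Jordan (2003) use variational inference. The corpus, the hyperparameters `alpha` and `beta` and the function name `lda_gibbs` are all hypothetical.

```python
import random


def lda_gibbs(docs, n_topics, n_iter=200, alpha=0.1, beta=0.01, seed=0):
    """Fit LDA by collapsed Gibbs sampling; docs are lists of word tokens."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    n_vocab = len(vocab)

    doc_topic = [[0] * n_topics for _ in docs]             # topic counts per document
    topic_word = [[0] * n_vocab for _ in range(n_topics)]  # word counts per topic
    topic_total = [0] * n_topics
    assignments = []

    # Start with a random topic for every word occurrence
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            z = rng.randrange(n_topics)
            z_doc.append(z)
            doc_topic[d][z] += 1
            topic_word[z][word_id[w]] += 1
            topic_total[z] += 1
        assignments.append(z_doc)

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for pos, w in enumerate(doc):
                v = word_id[w]
                z = assignments[d][pos]
                # Remove the current assignment from the counts
                doc_topic[d][z] -= 1
                topic_word[z][v] -= 1
                topic_total[z] -= 1
                # Unnormalized conditional probability of each topic
                weights = [
                    (doc_topic[d][k] + alpha)
                    * (topic_word[k][v] + beta)
                    / (topic_total[k] + n_vocab * beta)
                    for k in range(n_topics)
                ]
                z = rng.choices(range(n_topics), weights=weights)[0]
                assignments[d][pos] = z
                doc_topic[d][z] += 1
                topic_word[z][v] += 1
                topic_total[z] += 1

    # Smoothed estimates of the two model outputs
    theta = [
        [(c + alpha) / (len(doc) + n_topics * alpha) for c in doc_topic[d]]
        for d, doc in enumerate(docs)
    ]
    phi = [
        {vocab[v]: (topic_word[k][v] + beta) / (topic_total[k] + n_vocab * beta)
         for v in range(n_vocab)}
        for k in range(n_topics)
    ]
    return theta, phi


# Hypothetical toy corpus with two loose themes
docs = [
    "inflation prices inflation rates".split(),
    "rates inflation prices prices".split(),
    "jobs wages employment jobs".split(),
    "employment wages jobs wages".split(),
]
theta, phi = lda_gibbs(docs, n_topics=2)
```

Here `theta[d]` holds the topic probabilities for document `d`, and `phi[k]` maps each word to its probability under topic `k`.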

Appendix B: Transformers

Transformers are a deep-learning model architecture commonly used in text and image processing tasks. The key feature of transformers is a self-attention mechanism to process sequential input data, such as words in a sentence. This self-attention allows the model to identify the most relevant parts of the input sequence for each output. Vaswani et al. (2017) provide more details of the model.

Generally, the architecture of a transformer comprises an encoder and a decoder consisting of multiple self-attention layers and feed-forward neural networks. The encoder processes the input sequence, such as a sentence in one language, and produces a sequence of context vectors. The decoder uses these context vectors to generate a sequence of outputs, such as translating a sentence into another language. The key benefit of transformer models is their ability to handle long-range dependencies in input sequences, making them particularly effective for NLP tasks that require understanding the context of words or phrases within a longer sentence or paragraph.
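The self-attention step can be sketched in a few lines. The example below implements scaled dot-product attention as defined in Vaswani et al. (2017), where each output is a weighted average of the value vectors and the weights come from a softmax over scaled query-key dot products. The token embeddings are hypothetical, and for simplicity the queries, keys and values are the embeddings themselves; an actual transformer first applies learned linear projections and uses several attention heads.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over a sequence of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional embeddings for a three-token sequence
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to one and shows how much the corresponding token attends to every token in the sequence, including itself.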

Appendix C: ConvNext

ConvNext is a convolutional neural network (CNN) model inspired by the architecture of transformers. It is a deep-learning model commonly used for processing image and video data for various tasks like object detection, image classification and facial recognition. Liu, Mao et al. (2022) shed light on the model and its formulation.

Generally, CNN models have multiple layers sandwiched between input and output layers, including convolutional, pooling and fully connected layers. In the convolutional layers, the model performs a set of mathematical operations, called a convolution, on the input image to extract high-level features such as edges, corners and textures. The pooling layers downsample the convolutional layers’ output, such as by reducing the feature maps’ size for subsequent layers. Finally, the fully connected layers classify the image based on the extracted features. One of the key benefits of CNNs is their ability to learn spatial hierarchies of features. This means that the model can identify complex patterns and objects in images by first learning to recognize simpler features and gradually building up to more complicated ones.
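The convolution and pooling operations described above can be illustrated on a tiny image. The sketch below is a simplified, assumed setup: a single hand-written 2x2 kernel that responds to vertical edges, no padding, and non-overlapping max pooling. In a trained CNN such as ConvNext, the kernel values are learned from data and many kernels are stacked across layers.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; incomplete trailing windows are dropped."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# Hypothetical 5x5 grayscale image: dark on the left, bright on the right
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written kernel that responds to a left-to-right increase in brightness
edge_kernel = [[-1, 1], [-1, 1]]

feature_map = convolve2d(image, edge_kernel)  # 4x4 map, non-zero only at the edge
pooled = max_pool(feature_map)                # 2x2 downsampled map
```

The feature map is non-zero only in the column where brightness changes, and pooling keeps that signal while halving each dimension.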

Appendix D: Ensemble learning

Ensemble learning is a popular ML technique that combines multiple individual models, called base models or weak learners, to improve the accuracy and robustness of an overall prediction. Each base model is trained on randomly sampled subsets of the data, and their predictions are then combined to produce a final prediction. Decision trees are the most popular choice of base model. By combining multiple decision trees trained on different subsets of the data and using different parameters, ensemble learning can capture a broader range of patterns and complex relationships in the data to produce more accurate and robust predictions.

Bagging and boosting are two commonly used ensemble learning approaches. Bagging involves creating multiple decision trees, each trained on a randomly sampled subset of the training data. The final prediction is made by combining the predictions of all the individual decision trees, such as by taking the average or majority vote of the individual tree predictions. A random forest (Breiman 2001) is a popular example of this approach. In contrast, boosting involves training decision trees sequentially, with each new tree attempting to correct the errors of the previous trees by using a modified version of the original training data. The final prediction is made by combining the predictions of all the individual decision trees, with greater weight given to the predictions of the more accurate trees. A popular example is gradient boosting (Natekin and Knoll 2013). Advanced versions of boosting methods, such as XGBoost (Chen et al. 2015) and LightGBM (Ke et al. 2017), are the most popular. For more, see Sagi and Rokach (2018), which details the types, applications and limitations of ensemble learning.
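The contrast between the two approaches can be sketched with one-split regression trees (stumps) on a single feature. This is a simplified illustration under stated assumptions: the data are synthetic, bagging averages stumps fitted to bootstrap samples, and boosting is implemented as gradient boosting with squared-error loss, where each stump is fitted to the current residuals and all stumps share one learning rate rather than accuracy-based weights. Libraries such as XGBoost and LightGBM use far more elaborate versions of the boosting step.

```python
import random


def fit_stump(xs, ys):
    """Best single-split regression tree (stump) on one feature."""
    best = None
    for threshold in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        if not left or not right:
            continue
        l_mean, r_mean = sum(left) / len(left), sum(right) / len(right)
        sse = sum((y - l_mean) ** 2 for y in left) + sum((y - r_mean) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, l_mean, r_mean)
    if best is None:  # no valid split: predict the overall mean
        mean = sum(ys) / len(ys)
        return lambda x: mean
    _, threshold, l_mean, r_mean = best
    return lambda x: l_mean if x <= threshold else r_mean


def bagging(xs, ys, n_trees=25, seed=0):
    """Average of stumps, each fitted to a bootstrap sample."""
    rng = random.Random(seed)
    stumps = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(xs)) for _ in xs]  # sample with replacement
        stumps.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: sum(s(x) for s in stumps) / len(stumps)


def boosting(xs, ys, n_trees=25, learning_rate=0.5):
    """Gradient boosting with squared loss: each stump fits the residuals."""
    base = sum(ys) / len(ys)
    residuals = [y - base for y in ys]
    stumps = []
    for _ in range(n_trees):
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        residuals = [r - learning_rate * stump(x) for x, r in zip(xs, residuals)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)


def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Synthetic non-linear target, for illustration only
xs = [i / 10 for i in range(30)]
ys = [x ** 2 for x in xs]

single = fit_stump(xs, ys)
bagged = bagging(xs, ys)
boosted = boosting(xs, ys)
```

Comparing `mse(single, xs, ys)` with `mse(boosted, xs, ys)` shows how sequentially correcting residuals lets many weak stumps approximate a curve that a single split cannot.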

Appendix E: Transfer learning

Transfer learning is an ML technique that involves leveraging knowledge gained from training a model on one task to improve the performance of a model on a different but related task. By using a pre-trained model as a starting point for a new task, the model can leverage the knowledge and patterns learned from the previous task to improve its performance. This can lead to faster convergence, better generalization and improved accuracy, especially in situations where the new task has limited data available for training.

Transfer learning is particularly useful in deep learning. It is effective for large models with millions of parameters that require significant amounts of data for training. Transfer learning has been successfully applied in a wide range of tasks for processing nontraditional data. See Xie et al. (2016) for more details on the types, applications and limitations of transfer learning.
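The following toy sketch illustrates the feature-extraction form of transfer learning under strong simplifying assumptions. Feature weights are first learned on a hypothetical source task with ample data. They are then frozen and reused on a related target task that has only three observations, where only a slope and an intercept are fitted. All data and function names are invented; in deep learning the frozen part would be the layers of a large pre-trained network rather than a single linear map.

```python
def pretrain_linear_features(xs, ys, lr=0.05, epochs=500):
    """Source task: learn feature weights by full-batch gradient descent."""
    w = [0.0] * len(xs[0])
    n = len(xs)
    for _ in range(epochs):
        errors = [sum(wi * xi for wi, xi in zip(w, x)) - y for x, y in zip(xs, ys)]
        grads = [2.0 / n * sum(e * x[j] for e, x in zip(errors, xs)) for j in range(len(w))]
        w = [wi - lr * g for wi, g in zip(w, grads)]
    return w


def extract_feature(w, x):
    """Frozen 'pre-trained' representation: a single learned linear feature."""
    return sum(wi * xi for wi, xi in zip(w, x))


def fit_head(features, ys):
    """Target task: fit only a slope and intercept on the frozen feature (OLS)."""
    n = len(features)
    f_mean, y_mean = sum(features) / n, sum(ys) / n
    cov = sum((f - f_mean) * (y - y_mean) for f, y in zip(features, ys))
    var = sum((f - f_mean) ** 2 for f in features)
    slope = cov / var
    return slope, y_mean - slope * f_mean


# Source task with plenty of data (hypothetical): y = 3*x0 - 2*x1
source_x = [[float(a), float(b)] for a in (-1, 0, 1) for b in (-1, 0, 1)]
source_y = [3 * x0 - 2 * x1 for x0, x1 in source_x]
w = pretrain_linear_features(source_x, source_y)

# Related target task with only three observations: y = 0.5*(3*x0 - 2*x1) + 1
target_x = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
target_y = [2.5, 0.0, 2.0]
slope, intercept = fit_head([extract_feature(w, x) for x in target_x], target_y)

# Prediction for a new target observation using the frozen feature and new head
prediction = slope * extract_feature(w, [1.0, 1.0]) + intercept
```

Because the representation is reused, the target task needs to estimate only two parameters, which is why three observations are enough in this stylized setting.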

Endnotes

- 1. We review articles from the following 10 journals, which are highly cited and considered top-tier and field journals in economics: American Economic Review, Econometrica, Journal of Economic Perspectives, Journal of Monetary Economics, Journal of Political Economy, Journal of Econometrics, Quarterly Journal of Economics, Review of Economic Studies, American Economic Journal: Macroeconomics, and American Economic Journal: Microeconomics.

- 2. Motivation for this article comes from the American Economic Association’s (AEA) continuing education session on machine learning and big data at the 2023 AEA annual meeting (Dell and Harding 2023).

- 3. See Appendix A for more details on the LDA model, Appendix B for the transformer model, Appendix C for the ConvNext model, Appendix D for ensemble learning models and Appendix E for transfer learning.

- 4. Gentzkow, Kelly and Taddy (2019) review the use of text as data and ML methods for various economics applications.

References

Alexopoulos, M., X. Han, O. Kryvtsov and X. Zhang. 2022. “More Than Words: Fed Chairs’ Communication During Congressional Testimonies.” Bank of Canada Staff Working Paper No. 2022-20.

Angelico, C., J. Marcucci, M. Miccoli and F. Quarta. 2022. “Can We Measure Inflation Expectations Using Twitter?” Journal of Econometrics 228 (2): 259–277.

Athey, S. and G. W. Imbens. 2016. “Recursive Partitioning for Heterogeneous Causal Effects.” Proceedings of the National Academy of Sciences 113 (27): 7353–7360.

Athey, S. and G. W. Imbens. 2019. “Machine Learning Methods That Economists Should Know About.” Annual Review of Economics 11: 685–725.

Athey, S., J. Tibshirani and S. Wager. 2019. “Generalized Random Forests.” Annals of Statistics 47 (2): 1148–1178.

Babii, A., R. T. Ball, E. Ghysels and J. Striaukas. 2022. “Machine Learning Panel Data Regressions with Heavy-Tailed Dependent Data: Theory and Application.” Journal of Econometrics, corrected proof. https://doi.org/10.1016/j.jeconom.2022.07.001

Babii, A., E. Ghysels and J. Striaukas. 2022. “Machine Learning Time Series Regressions With an Application to Nowcasting.” Journal of Business & Economic Statistics 40 (3): 1094–1106.

Bandiera, O., A. Prat, S. Hansen and R. Sadun. 2020. “CEO Behavior and Firm Performance.” Journal of Political Economy 128 (4): 1325–1369.

Bianchi, F., S. C. Ludvigson and S. Ma. 2022. “Belief Distortions and Macroeconomic Fluctuations.” American Economic Review 112 (7): 2269–2315.

Blei, D. M., A. Y. Ng and M. I. Jordan. 2003. “Latent Dirichlet Allocation.” Journal of Machine Learning Research 3: 993–1022.

Bluwstein, K., M. Buckmann, A. Joseph, S. Kapadia and O. Simsek. 2023. “Credit Growth, the Yield Curve and Financial Crisis Prediction: Evidence from a Machine Learning Approach.” Journal of International Economics, pre-proof: 103773.

Breiman, L. 2001. “Random Forests.” Machine Learning 45: 5–32.

Brown, T., B. Mann, N. Ryder, M. Subbiah, J. D. Kaplan, P. Dhariwal, A. Neelakantan, P. Shyam, G. Sastry, A. Askell, S. Agarwal, A. Herbert-Voss, G. Krueger, T. Henighan, R. Child, A. Ramesh, D. Ziegler, J. Wu, C. Winter, C. Hesse, M. Chen, E. Sigler, M. Litwin, S. Gray, B. Chess, J. Clark, C. Berner, S. McCandlish, A. Radford, I. Sutskever and D. Amodei. 2020. “Language Models are Few-Shot Learners.” Advances in Neural Information Processing Systems 33: 1877–1901.

Buckmann, M., A. Joseph and H. Robertson. 2021. “Opening the Black Box: Machine Learning Interpretability and Inference Tools With an Application to Economic Forecasting.” In Data Science for Economics and Finance, edited by S. Consoli, D. Reforgiato Recupero and M. Saisana, 43–63. Cham, Switzerland: Springer Nature Switzerland AG.

Castro, P. S., A. Desai, H. Du, R. Garratt and F. Rivadeneyra. 2021. “Estimating Policy Functions in Payment Systems Using Reinforcement Learning.” Bank of Canada Staff Working Paper No. 2021-7.

Chapman, J. T. and A. Desai. 2022. “Macroeconomic Predictions Using Payments Data and Machine Learning.” Bank of Canada Staff Working Paper No. 2022-10.

Chen, M., A. Joseph, M. Kumhof, X. Pan, R. Shi and X. Zhou. 2021. “Deep Reinforcement Learning in a Monetary Model.” arXiv preprint, arXiv:2104.09368v1.

Chen, M., A. Radford, R. Child, J. Wu, H. Jun, D. Luan and I. Sutskever. 2020. “Generative Pretraining from Pixels.” In Proceedings of the 37th International Conference on Machine Learning 119: 1691–1703.

Chen, T., T. He, M. Benesty, V. Khotilovich, Y. Tang, H. Cho, K. Chen, R. Mitchell, I. Cano, T. Zhou, M. Li, J. Xie, M. Lin, Y. Geng and Y. Li. 2015. “Xgboost: Extreme Gradient Boosting.” R package version 0.4-2 1 (4): 1–4.

Davis, J. M. V. and S. B. Heller. 2017. “Using Causal Forests to Predict Treatment Heterogeneity: An Application to Summer Jobs.” American Economic Review 107 (5): 546–550.

Decarolis, F. and G. Rovigatti. 2021. “From Mad Men to Maths Men: Concentration and Buyer Power in Online Advertising.” American Economic Review 111 (10): 3299–3327.

Dell, M. and M. Harding. 2023. “Machine Learning and Big Data.” Presentation at the American Economic Association 2023 Continuing Education Program, January 8–10, New Orleans, La. https://www.aeaweb.org/conference/cont-ed/2023-webcasts

Dobbie, W., A. Liberman, D. Paravisini and V. Pathania. 2021. “Measuring Bias in Consumer Lending.” Review of Economic Studies 88 (6): 2799–2832.

Donaldson, D. and A. Storeygard. 2016. “The View from Above: Applications of Satellite Data in Economics.” Journal of Economic Perspectives 30 (4): 171–198.

Farbmacher, H., L. Löw and M. Spindler. 2022. “An Explainable Attention Network for Fraud Detection in Claims Management.” Journal of Econometrics 228 (2): 244–258.

Gentzkow, M., B. Kelly and M. Taddy. 2019. “Text as Data.” Journal of Economic Literature 57 (3): 535–574.

Gorodnichenko, Y., T. Pham and O. Talavera. 2023. “The Voice of Monetary Policy.” American Economic Review 113 (2): 548–584.

Goulet Coulombe, P. 2020. “The Macroeconomy as a Random Forest.” Available at SSRN: https://ssrn.com/abstract=3633110.

Goulet Coulombe, P., M. Leroux, D. Stevanovic and S. Surprenant. 2022. “How Is Machine Learning Useful for Macroeconomic Forecasting?” Journal of Applied Econometrics 37 (5): 920–964.

Gu, S., B. Kelly and D. Xiu. 2021. “Autoencoder Asset Pricing Models.” Journal of Econometrics 222 (1): 429–450.

Hansen, S., M. McMahon and A. Prat. 2018. “Transparency and Deliberation Within the FOMC: A Computational Linguistics Approach.” Quarterly Journal of Economics 133 (2): 801–870.

Harding, M. and J. Hersh. 2018. “Big Data in Economics.” IZA World of Labor 451. doi:10.15185/izawol.451

Henderson, J. V., A. Storeygard and D. N. Weil. 2012. “Measuring Economic Growth from Outer Space.” American Economic Review 102 (2): 994–1028.

Hinterlang, N. and A. Tänzer. 2021. “Optimal Monetary Policy Using Reinforcement Learning.” Deutsche Bundesbank Discussion Paper No. 51/2021.

Ke, G., Q. Meng, T. Finley, T. Wang, W. Chen, W. Ma, Q. Ye and T.-Y. Liu. 2017. “Lightgbm: A Highly Efficient Gradient Boosting Decision Tree.” Advances in Neural Information Processing Systems 30.

Kleinberg, J., H. Lakkaraju, J. Leskovec, J. Ludwig and S. Mullainathan. 2018. “Human Decisions and Machine Predictions.” Quarterly Journal of Economics 133 (1): 237–293.

Larsen, V. H., L. A. Thorsrud and J. Zhulanova. 2021. “News-driven Inflation Expectations and Information Rigidities.” Journal of Monetary Economics 117: 507–520.

Liu, J., C. Li, P. Ouyang, J. Liu and C. Wu. 2022. “Interpreting the Prediction Results of the Tree-based Gradient Boosting Models for Financial Distress Prediction with an Explainable Machine Learning Approach.” Journal of Forecasting 42 (5): 1037–1291.

Liu, Z., H. Mao, C.-Y. Wu, C. Feichtenhofer, T. Darrell and S. Xie. 2022. “A ConvNet for the 2020s.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition June: 11966–11976.

Lundberg, S. M. and S.-I. Lee. 2017. “A Unified Approach to Interpreting Model Predictions.” Advances in Neural Information Processing Systems 30.

Maliar, L., S. Maliar and P. Winant. 2021. “Deep Learning for Solving Dynamic Economic Models.” Journal of Monetary Economics 122: 76–101.

Mullainathan, S. and Z. Obermeyer. 2022. “Diagnosing Physician Error: A Machine Learning Approach to Low-value Health Care.” Quarterly Journal of Economics 137 (2): 679–727.

Mullainathan, S. and J. Spiess. 2017. “Machine Learning: An Applied Econometric Approach.” Journal of Economic Perspectives 31 (2): 87–106.

Naik, N., R. Raskar and C. A. Hidalgo. 2016. “Cities Are Physical Too: Using Computer Vision To Measure the Quality and Impact of Urban Appearance.” American Economic Review 106 (5): 128–132.

Natekin, A. and A. Knoll. 2013. “Gradient Boosting Machines, a Tutorial.” Frontiers in Neurorobotics 7.

Richardson, A., T. van Florenstein Mulder and T. Vehbi. 2021. “Nowcasting GDP Using Machine-Learning Algorithms: A Real-Time Assessment.” International Journal of Forecasting 37 (2): 941–948.

Sagi, O. and L. Rokach. 2018. “Ensemble Learning: A Survey.” Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery 8 (4): e1249.

Shen, Z., R. Zhang, M. Dell, B. C. G. Lee, J. Carlson and W. Li. 2021. “Layoutparser: A Unified Toolkit for Deep Learning Based Document Image Analysis.” In Document Analysis and Recognition — ICDAR 2021, edited by J. Lladós, D. Lopresti and S. Uchida, 131–146. Proceedings of the 16th International Conference, Lausanne, Switzerland, September 5–10.

Triepels, R., H. Daniels and R. Heijmans. 2017. “Anomaly Detection in Real-Time Gross Settlement Systems.” In Proceedings of the 19th International Conference on Enterprise Information Systems – Volume 1: ICEIS, 433–441. Porto, Portugal, April 26–29.

Varian, H. R. 2014. “Big Data: New Tricks for Econometrics.” Journal of Economic Perspectives 28 (2): 3–28.

Vaswani, A., N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser and I. Polosukhin. 2017. “Attention Is All You Need.” Advances in Neural Information Processing Systems 30: 5999–6009.

Wager, S. and S. Athey. 2018. “Estimation and Inference of Heterogeneous Treatment Effects Using Random Forests.” Journal of the American Statistical Association 113 (523): 1228–1242.

Xie, M., N. Jean, M. Burke, D. Lobell and S. Ermon. 2016. “Transfer Learning from Deep Features for Remote Sensing and Poverty Mapping.” In AAAI’16: Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, 3929–3935. Phoenix, Arizona, February 12–17.

Yoon, J. 2021. “Forecasting of Real GDP Growth Using Machine Learning Models: Gradient Boosting and Random Forest Approach.” Computational Economics 57 (1): 247–265.

Zheng, S., A. Trott, S. Srinivasa, D. C. Parkes and R. Socher. 2022. “The AI Economist: Taxation Policy Design Via Two-Level Deep Multiagent Reinforcement Learning.” Science Advances 8 (18).

Acknowledgements

The opinions here are solely those of the authors and do not necessarily reflect those of the Bank of Canada. We thank Jacob Sharples for his assistance on the project. We also thank Andreas Joseph, Dave Campbell, Jonathan Chiu, Narayan Bulusu and Stenio Fernandes for their suggestions and comments on the article.

Disclaimer

Bank of Canada staff analytical notes are short articles that focus on topical issues relevant to the current economic and financial context, produced independently from the Bank’s Governing Council. This work may support or challenge prevailing policy orthodoxy. Therefore, the views expressed in this note are solely those of the authors and may differ from official Bank of Canada views. No responsibility for them should be attributed to the Bank.

DOI: https://doi.org/10.34989/san-2023-16